Many times throughout our lives we may have heard it said that any event, however improbable, may eventually happen if we give it enough time to occur, or if the places in which such an event can take place are numerous enough (or both). This is the same argument that has been used to defend the possibility of extraterrestrial life somewhere out there in the cosmos, and which has been used to justify enormous expenditures in the search for signs of life outside planet Earth, such as the SETI (Search for Extraterrestrial Intelligence) project or the unmanned missions to Mars. (This hope that some kind of intelligent life exists, or has existed, in other parts of the galaxy or the Universe besides planet Earth may be subconsciously motivated by a justified fear that we may be alone in the Universe, with no other forms of intelligent life in the cosmos besides ourselves, a possibility which may be too hard for many people to swallow.)

Regardless of whether we are completely alone in the Universe or whether we actually have a lot of company out there of which we are unaware, our existence on this planet has already proven that, as far as this Universe is concerned, at some point in its evolution the Universe was capable of producing the conditions that made possible the appearance of intelligent life: Homo sapiens.

Before going any further, let us carry out a simple practical experiment in order to get an idea of how easy or how difficult it would be to obtain, by random chance alone, something that we know beforehand was produced by intelligent life. The reader may wish to take part in this experiment, which was designed for his/her entertainment.

To begin with, we write the individual letters of a simple word such as “HOUSE” on separate (but identical) pieces of paper (the reader may wish to use his/her first name instead, such as “DANIEL” or “SYLVIA”). The next step is to throw the pieces of paper into an empty box or container and shake the box around so thoroughly that we have no idea where each individual letter is located. Once this has been done, the reader takes one of the pieces of paper out of the box blindly. Since the pieces of paper have the same size and shape, there is no way of telling from the outside, without looking directly at them, which one we are actually pulling out. In more precise terminology, each piece of paper has the same chance of being taken out of the box as any other. In the case of the word “HOUSE”, each letter has a probability of one fifth (or 20%) of being the first one drawn.

The first piece of paper drawn is placed on a flat surface to start forming the word “HOUSE”, and the reader starts a count of one: this is the first attempt. If the letter was the letter “H”, the reader takes out the next piece of paper; if it is the letter “O”, it is used to continue forming the word “HOUSE”, and so on. But as soon as a letter comes out of order (say, the first letter is not “H”, or the second letter is not “O”), the reader places all the letters drawn so far back into the box, shakes it again, starts all over, and increases the count by one. The purpose of the game is to keep drawing letters from the box, putting all of them back whenever the order goes wrong, until we finally draw them in the right order to compose the word “HOUSE”, all the while increasing the count with every new attempt. The game ends when the word “HOUSE” is finally completed, at which point the count is the total number of attempts it took us to obtain the word.

We may get extremely lucky and draw each letter in the correct order during our very first attempt, in which case we will end the game with a tally of one. However, if the experiment is repeated, it becomes unlikely that we will again get a tally of one, and we may well have to try dozens of times, or even more than a hundred, before we hit on the right combination again. If the experiment is repeated a third time, the odds of succeeding on the first attempt three games in a row become smaller still. These experimental observations can actually be put into a mathematical framework, and we can estimate the probability of getting the right combination on any single attempt, which tells us how often we should succeed in the long run, over a large number of trials. The line of thought is as follows:

In the word “HOUSE”, there are five different letters. Thus, there are five different ways in which we can pick out the first letter of the word (and only one of them will be the right one, the letter “H”). Once we have picked out the first letter, there are only four letters remaining in the box, and we can pick out the second letter in any of four different ways. Thus, we can pick out the first two letters in 5 • 4 = 20 different ways (and only one combination will be the right one, the pair of letters “H” and “O” in that order). Extending this argument, it is obvious that we can pick out the five letters from the box in a total of

5 • 4 • 3 • 2 • 1 = 120 different ways

But there is only one way of drawing the letters one after the other that is the “right one”, the one in the correct order to form the word “HOUSE”. By the very definition of probability, if an event can happen in n ways out of a total of N equally likely possibilities, then the probability of that event is n/N, which is the fraction of trials in which we should expect it to occur in the long run. Thus, the probability of correctly getting the word “HOUSE” will be 1/120, or about 0.0083, or 0.83%. In other words, if we repeat our experiment many times, then as the odds even out we will find that, on average, we pick out the right combination less than one percent of the time, roughly once every 120 attempts.
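The counting argument can be checked with a short Python sketch. It is an illustration, not part of the original experiment: it enumerates all orderings of the five slips with `itertools.permutations`, then simulates the game, treating each attempt as drawing all five slips in shuffled order. Stopping an attempt early at the first wrong letter, as in the game described above, does not change the chance that an attempt succeeds, so the average number of attempts should come out near 120. The function name and the seed are arbitrary choices for this example.

```python
import itertools
import math
import random

WORD = "HOUSE"

# Every order in which the five slips can come out of the box: 5 * 4 * 3 * 2 * 1 of them.
orderings = list(itertools.permutations(WORD))
print(len(orderings), math.factorial(len(WORD)))  # 120 120

# Exactly one ordering spells the word, so p = n / N = 1 / 120.
p_exact = sum("".join(o) == WORD for o in orderings) / len(orderings)
print(f"exact probability per attempt: {p_exact:.4f}")  # 0.0083

def attempts_until_success(word, rng):
    """Count attempts (each a fresh shuffle of the box) until the word comes out in order."""
    slips = list(word)
    attempts = 0
    while True:
        attempts += 1
        rng.shuffle(slips)  # draw all slips blindly, in random order
        if "".join(slips) == word:
            return attempts

rng = random.Random(2024)  # fixed seed so the run is reproducible
runs = [attempts_until_success(WORD, rng) for _ in range(2000)]
print(f"average attempts over 2000 games: {sum(runs) / len(runs):.1f}")  # close to 120
```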

If the word has more than six letters, the average reader may quickly become dismayed and begin to realize that it is not an easy task to come up with the right combination of letters to form such a word if the letters are picked out entirely at random (a word of seven different letters can already come out of the box in 7 • 6 • 5 • 4 • 3 • 2 • 1 = 5,040 different orders).

If instead of trying to form a simple word we attempt to compose a phrase (for which we would require at least one blank piece of paper inside the box representing the space used between two different words), the reader can easily imagine that getting such a phrase out of a random combination of letters would be a very difficult and time-consuming task.

We are now ready to pose a more interesting question: If we have a box that is big enough to contain a large number of letters from the alphabet, say some ten million letters, what then is the probability of getting, just by drawing the letters out from the box on an entirely random basis, a work such as Shakespeare’s Hamlet?

Most readers, including many skeptics, will agree that the odds of such an event taking place are, for all practical purposes, nearly zero, and we would not expect to witness such a miracle within our lifetimes. After reading a work such as Hamlet, the average reader will come to the unavoidable conclusion that such a work could not have been the result of thousands upon thousands of letters being poured out entirely at random, and that a being possessing a fair amount of intelligence (and knowledge) had to be its creator. However, and it is important to emphasize this, according to the rules of probability there is some chance, however small, of such an event taking place. Can a work such as Hamlet eventually be produced on an entirely random basis by a computer churning out billions upon billions of combinations of letters per second, given enough time to accomplish the task? Absolutely. It is indeed possible. However, it is highly improbable. It is imperative not to confuse what is possible with what is probable. An event that is possible may have a probability of taking place that is so small that, even with an ultra-fast computer working on it since the creation of the Universe, it would still be waiting to happen. And we know for a fact that Shakespeare’s mind was not an ultra-fast computer, and that his life span was certainly much, much shorter than the known age of the Universe.

In a random universe, the only way to give a very small probability any practical meaning is to resort to the sheer brute power of large numbers. For example, if a black marble is lost inside a big box containing about one thousand white marbles, with all of the marbles thoroughly mixed, then the probability of blindly grabbing the black marble on our first try will be about one in a thousand. If we put the black marble back in the box, mix the marbles thoroughly, and get a second chance to draw it out, we still have a very small probability of drawing out the black marble. However, if we repeat the experiment one million times, then our common sense tells us that on at least one occasion, probably more, we should have drawn out the black marble. After all, the box only contains about one thousand white marbles. If after one million repetitions we still have not drawn the black marble from the box, then we may begin to suspect that either we have been among the unluckiest inhabitants there have ever been on this side of the galaxy or, most likely, the box never did contain any black marble. Thus, even though the probability of drawing the black marble on any single trial may be small, the brute-force approach of resorting to large numbers (in this case, by increasing the number of trials) raises the probability of drawing it at least once enough to make it an anticipated event that is due to happen sooner or later, instead of an event unlikely to happen.

In order to make the case even stronger, we will analyze the problem of the black marble lost inside a box from a mathematical perspective. We will assume that the box has exactly 999 white marbles and one black marble. We will also assume that after each trial the marble we drew will be put back into the box, so as not to deplete the number of marbles inside the box (besides making the mathematics easier to handle).

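A quick simulation under exactly those assumptions (999 white marbles, one black marble, each marble returned after the draw) shows what common sense predicts for one million trials. This is a hypothetical Python sketch; numbering the marbles and calling marble 0 the black one is just a convenience.

```python
import random

WHITE, BLACK = 999, 1
TOTAL = WHITE + BLACK  # 1,000 marbles in the box

def count_black_draws(trials, rng):
    """Draw one marble per trial, returning it to the box each time; count black draws."""
    blacks = 0
    for _ in range(trials):
        # Marbles are numbered 0..TOTAL-1; marble 0 stands for the black one.
        if rng.randrange(TOTAL) == 0:
            blacks += 1
    return blacks

rng = random.Random(7)  # fixed seed for reproducibility
hits = count_black_draws(1_000_000, rng)
print(f"black marble drawn {hits} times in 1,000,000 trials")  # on the order of 1,000
```

With one chance in a thousand per draw, a million draws should turn up the black marble about a thousand times; a count of zero would point to a box without a black marble rather than to bad luck.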
As we already said, if we carry out the experiment only once, then the probability of getting the black marble will be one in one thousand, or 0.001, or 0.1%. A probability of one-tenth of one percent is hardly something any wily gambler would bet on. What if we put the first marble back into the box and try our luck a second time? If we call $P_1$ the probability that we get the black marble during the first trial, $P_2$ the probability that we get the black marble during the second trial, and $P_1P_2$ the probability that we get the black marble on both occasions (since the two draws are independent, this is simply the product of the two individual probabilities), then it can be proven that the probability $P$ that we get the black marble at least once in those two trials is given by the following expression (the product is subtracted because adding $P_1$ and $P_2$ counts twice the case in which both trials succeed):

$$P = P_1 + P_2 - P_1 P_2$$

$$P = 0.001 + 0.001 - (0.001)(0.001)$$

$$P = 0.001999$$

And with two trials, our odds of getting that black marble have nearly doubled, to about two-tenths of one percent. This is still a very small probability. What if we make three attempts? In that case, if we call $P_1$, $P_2$ and $P_3$ the probabilities that we get the black marble during the first, second and third trial respectively, $P_1P_2$ the probability that we get it on the first two trials, $P_2P_3$ the probability that we get it on the last two trials, $P_1P_3$ the probability that we get it on the first and the last trial, and $P_1P_2P_3$ the probability that we get it on all three occasions, then it can be proven that the probability $P$ that we get the black marble at least once in those three trials is given by the expression:

$$P = P_1 + P_2 + P_3 - P_1 P_2 - P_2 P_3 - P_1 P_3 + P_1 P_2 P_3$$

$$P = 0.001 + 0.001 + 0.001 - (0.001)(0.001) - (0.001)(0.001) - (0.001)(0.001) + (0.001)(0.001)(0.001)$$

$$P = 0.002997001$$

With three trials, the odds of getting our hands on that black marble have almost tripled, to about three-tenths of one percent. Enticed by our rising expectations, we would like to make a bigger number of trials; say, ten trials. But extending the above formulas is out of the question, since they quickly become extremely cumbersome (with ten trials there would be 1,023 terms to add and subtract), and we must resort to heavier mathematical artillery. Instead of counting the ways of succeeding, we count the single way of failing. If we call q the probability that on any given trial we will not pick out the black marble, then, since the trials are independent, the probability of missing it on every one of N trials is q multiplied by itself N times, and the probability P that we pick out the black marble at least once in N trials is therefore given by the expression:

$$P = 1 - q^N$$

Thus, if we carry out three different attempts to draw out the black marble, with q = 1 - 0.001 = 0.999, the odds of getting it will be:

$$P = 1 - (0.999)^3$$

$$P = 0.002997001$$

and, as expected, the answer checks out with the result we got before.
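The two routes to the same answer, adding and subtracting overlapping cases (inclusion-exclusion) versus one minus the probability of missing every time, can be compared numerically. In this Python sketch the helper names are made up for illustration, and it assumes independent trials that each have the same probability p = 0.001.

```python
from itertools import combinations
from math import prod

def at_least_once_inclusion_exclusion(probs):
    """P(at least one success) for independent trials: add single terms,
    subtract pairs, add triples, and so on."""
    total = 0.0
    for k in range(1, len(probs) + 1):
        sign = 1 if k % 2 == 1 else -1
        for subset in combinations(probs, k):
            total += sign * prod(subset)  # independence: P(all in subset) is the product
    return total

def at_least_once_complement(p, n):
    """P = 1 - q**n with q = 1 - p: one minus the chance of missing every time."""
    return 1 - (1 - p) ** n

p = 0.001  # one black marble among 1,000
for n in (1, 2, 3, 4, 5):
    ie = at_least_once_inclusion_exclusion([p] * n)
    cr = at_least_once_complement(p, n)
    print(n, f"{ie:.9f}", f"{cr:.9f}")
```

With n trials the inclusion-exclusion sum has $2^n - 1$ terms, which is why the complement shortcut takes over for larger n.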

For ten trials, the probability of drawing out the black marble at least once is:

$$P = 1 - (0.999)^{10}$$

$$P \approx 0.00995512$$

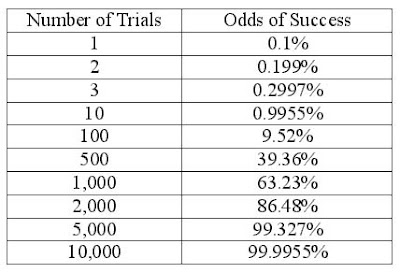

The odds are now almost one percent. And if we keep increasing the number of trials, the odds get even better, as can be seen from the following table:

After five hundred trials, we have more than one chance in three of having picked out that black marble. After one thousand trials, we have a better than fifty-fifty chance of having found it. Notice that the odds begin to turn in our favor when the number of trials approaches or exceeds the total number of different ways in which a marble may be drawn from the box (one thousand different ways in this case, since there are one thousand marbles inside the box). And after ten thousand trials, the odds that we will have found the black marble are so close to one hundred percent that any gambler, no matter how timid or how dumb, would gladly bet on the black marble being found before the ten thousand trials are over. Thus, even if that small black marble is lost in the box among a thousand marbles, by resorting to a large number of trials we have raised our chances of finding it almost to mathematical certainty.

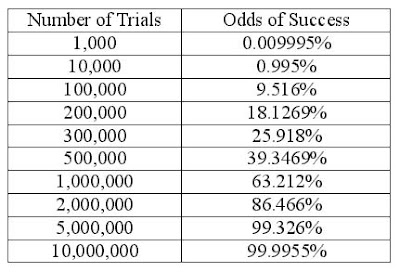
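The growth of P with the number of trials for the 1-in-1,000 box can be tabulated directly from $P = 1 - q^N$. The Python sketch below is illustrative, and the choice of trial counts is arbitrary. The rows for 500, 1,000 and 10,000 trials come out at roughly 0.39, 0.63 and 0.99995, in line with the description above.

```python
def p_at_least_once(p, n):
    """Probability of drawing the black marble at least once in n independent trials."""
    q = 1 - p          # chance of missing on a single trial
    return 1 - q ** n  # P = 1 - q^N

p = 1 / 1000  # one black marble among 1,000
for n in (1, 10, 100, 500, 1000, 2000, 5000, 10000):
    print(f"{n:>6} trials: P = {p_at_least_once(p, n):.6f}")
```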

Perhaps the problem was just too easy. What if we throw the black marble into a box containing one million marbles? Then our chances of drawing out the black marble on a single trial would be only one in a million. Wouldn’t this make it impossible for us to find the black marble, regardless of the number of attempts? Not if we throw in large numbers. After the first one thousand trials, the probability of finding that single black marble lost in a box containing one million marbles is:

$$P = 1 - (0.999999)^{1000}$$

$$P \approx 0.0009995$$

This is a rather small probability, in spite of the fact that we have thrown in one thousand trials. However, not to be discouraged, let us increase without bound the number of trials. Then we can see our chances of finding that black marble improving dramatically as the number of trials goes up:

Thus, even though the black marble is now lost in a box containing one million marbles, by the mere trick of resorting to large numbers we have again raised the chances of finding it almost to mathematical certainty. Notice again that the odds definitely begin to turn in our favor when the number of trials approaches or exceeds the total number of possible ways in which a marble may be drawn from the box (one million in this case, since there are one million marbles inside the box). No matter how small the probability may be, the sheer power of large numbers has the capacity to make many very improbable events not just very probable, but almost certain to occur. Similar arguments have been used to justify the possibility of extraterrestrial life on the grounds that any event with an infinitesimally small probability of occurring will indeed happen provided that the number of trials is sufficiently large. In a laboratory as large as the Universe itself, operating throughout under the same physical laws as the ones that gave rise to life on Earth, the sheer power of the many billions upon billions of galaxies, stars and planets involved would make the appearance of life elsewhere almost a certainty.
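Both marble experiments, and the point at which the odds turn, can be checked with a short Python sketch (an illustration, not the author's own calculation). With p = 1/M and N = M trials, $(1 - 1/M)^M$ approaches $1/e$, so P approaches $1 - 1/e \approx 0.632$ whatever the size of the box. The sketch evaluates $1 - (1-p)^N$ as `-math.expm1(N * math.log1p(-p))`; for p = 10⁻⁶ the naive formula would also work, but for far smaller probabilities (such as those that appear later in this chapter) `1 - p` rounds to exactly 1.0 in floating point and the naive result collapses to zero.

```python
import math

def p_at_least_once(p, n):
    """P = 1 - (1 - p)**n, evaluated as -expm1(n * log1p(-p)) so that a tiny p
    is not lost when 1 - p is rounded to a double."""
    return -math.expm1(n * math.log1p(-p))

# One black marble among a million: the odds as the number of trials grows.
p = 1e-6
for n in (1_000, 10_000, 100_000, 1_000_000, 5_000_000, 10_000_000):
    print(f"{n:>12,} trials: P = {p_at_least_once(p, n):.7f}")

# Crossover: with M equally likely outcomes and N = M trials, P approaches 1 - 1/e.
for m in (1_000, 1_000_000, 1_000_000_000):
    print(f"M = {m:>13,}: P(N = M) = {p_at_least_once(1 / m, m):.4f}")
print(f"1 - 1/e = {1 - 1 / math.e:.4f}")

# The naive formula breaks down for much smaller p, because 1 - 1e-38 == 1.0 in floating point.
print(1 - (1 - 1e-38) ** 10**30)        # 0.0
print(p_at_least_once(1e-38, 10**30))   # about 1e-08
```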

The tactic of resorting to large numbers to make unlikely events happen can be traced all the way back to the Roman poet and philosopher Lucretius, who wrote the poem On the Nature of the Universe (De rerum natura) in an attempt to explain the universe in scientific terms, with the purpose of freeing people from superstition and allaying their fears of the unknown. Indeed, he went as far as using the argument to defend the possibility of extraterrestrial life. In his poem we can read the following excerpt:

“Granted, then, that empty space extends without limit in every direction and that seeds innumerable in number are rushing on countless courses through an unfathomable universe under the impulse of perpetual motion, it is in the highest degree unlikely that this earth and sky is the only one to have been created.”

Lucretius, who in this respect was way ahead of his time, would perhaps have been quite successful in procuring funding for a SETI program if only the Roman Empire of two thousand years ago had had the technology we have today. Anyway, the basic argument remains the same to this day: given an infinite amount of material ceaselessly combining and recombining over an infinite amount of time, all the possibilities and configurations allowed under the laws of Nature will occur and recur without end.

However, we now know that the Universe has not had an infinite amount of time available to try out every possible combination. In fact, the Universe is relatively young, and has not existed for more than some 15 billion years according to our most recent theoretical calculations and astronomical observations. Though 15 billion years may seem like a long time, much of that time has been consumed in the formation of galaxies and stars, and it is doubtful that any life form could have had any chance to arise in the middle of interstellar dust (at least life as we know it). The only large quantity available to “beat the odds” is the seemingly “infinite” amount of material dispersed across a very large space and already compacted into usable planets or satellites, and it is there that we can find the large numbers and put them to use. But even then, resorting solely to the power of large numbers to make the emergence of any form of life possible is just not enough. A more detailed calculation of the odds for life appearing anywhere by resorting to large-number stratagems such as evolution still comes up extremely short, and recent discoveries from the modern science of chaos point to the fact that the only way the odds can be beaten is for the basic phenomena that allow life to evolve to be nonlinear (more will be said about this in a later chapter). Fortunately, many features of our Universe are nonlinear, and it is this extraordinarily lucky coincidence (?) that allows us to be here today communicating with each other. Indeed, if we were designing a Universe all our own in the hope of seeing life evolve, we would have to “build in” the capability for nonlinearity; otherwise life would most likely never evolve, regardless of how long we might be willing to wait, since the odds remain extremely dismal even with very large numbers thrown in.

Going back to our original experiment, where we tried to form the word “HOUSE” by drawing each of the letters at random, let us now try a different approach. Instead of picking out each of the five letters from a box ourselves, let us have a computer carry out the task for us. In so doing, we will allow each letter to be picked out at random from the English alphabet consisting of 26 letters. In addition, we will allow the computer to pick out the character at random from a numeric keypad consisting of the digits zero to nine. Thus, our alphanumeric “random soup” can give us one character out of 36 different possibilities. We will add two more characters available from the keyboard: the “space” character used to separate words and the “Enter” key (in old typewriters this key corresponds to the “carriage return”). Then, each time we let the computer pick out a character at random, it can do so from among 38 different possibilities. Unlike the slips of paper in the box, every key remains available on every pick, so the same character may come up more than once in a trial. In principle, the experiment could also be carried out by letting loose a monkey inside a cage containing an old mechanical typewriter and waiting for the monkey to push any of the 38 different keys at random, but the computer has the added advantage that it can automatically check each combination of five characters, and once a combination is found to be an exact match for the word “HOUSE”, the computer can be programmed to stop and print out the final tally with the total number of attempts it took to hit upon the word “HOUSE”. We will allow the computer to gather five characters in each trial; if even one of the characters differs from the character required at that position to form the word “HOUSE”, the trial will be considered a failure, whereas once the correct match is obtained the trial will be considered a success.
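The computer version of the experiment can be sketched in Python as follows. The 38-key set, the function name and the seed are assumptions made for illustration, with the Enter key represented by a newline character. Because “HOUSE” would take about $38^5 \approx 79$ million trials on average, the demonstration uses the two-character target “HO”, which needs about $38^2 = 1{,}444$ trials on average.

```python
import random
import string

# Assumed 38-key "keyboard": 26 letters, 10 digits, the space bar, and Enter (as "\n").
KEYS = string.ascii_uppercase + string.digits + " \n"
assert len(KEYS) == 38

def trials_until_match(target, rng):
    """Type len(target) random keys per trial; stop at the first trial that matches."""
    trials = 0
    while True:
        trials += 1
        # Every key stays available on every pick, so characters may repeat.
        attempt = "".join(rng.choice(KEYS) for _ in range(len(target)))
        if attempt == target:
            return trials

rng = random.Random(1)  # fixed seed for reproducibility
runs = [trials_until_match("HO", rng) for _ in range(200)]
print(f"average trials for 'HO': {sum(runs) / len(runs):.0f}")  # in the neighborhood of 1,444
```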

The odds of picking out correctly the first character are one in 38, since only one of the characters (the letter “H”) will be an exact match for the first letter required to form the word “HOUSE”. Likewise, there are 38 different ways in which the second letter can be picked out, and only one of them will be the correct one. Thus, there are a total of 1444 different ways in which the first two letters can be picked out, and only one way in which the correct sequence of the first two letters will be selected. The odds of picking out correctly the first two characters are thus one in 1444. It is not hard to see that there are a total of

38 • 38 • 38 • 38 • 38 = 79,235,168 different ways

in which a five-character string can be formed (most of these combinations are sheer nonsense that no English dictionary lists, at least not yet). Therefore, the odds that the computer will churn out the word “HOUSE” correctly in a single trial are one in 79,235,168, or about 0.00000126%, close to one-millionth of one percent. Can we increase the odds by the usual trick of resorting to large numbers? We certainly can, and there are two alternatives at our disposal:

- Repeat the experiment, with many other trials using the same computer.

- Carry out the experiment using many computers working at the same time.

In the first case, we may need a lot of time. In the second case, we will need a lot of computers. But either way, at least in principle, we can raise the odds by pulling in the magic of large numbers. For the sake of argument, let us assume that we will repeat the experiment many times over using the same computer (this is perfectly feasible with the ultra-fast personal computers of today). If we repeat the experiment ten thousand times, then the odds of hitting upon the correct combination of characters at least once will be:

$$P = 1 - \left(1 - \frac{1}{79{,}235{,}168}\right)^{10000} \approx 1 - (0.9999999874)^{10000}$$

$$P \approx 0.0001262$$

Our odds are now much better (relatively speaking), for there is now a 0.0126% chance that we will have gotten the word “HOUSE” after ten thousand trials, going from just one-millionth of one percent to about one-hundredth of one percent. And as we keep on increasing the number of trials the odds get even better and better, as can be seen from the following table:

Again, notice that the odds begin to turn in our favor once the number of trials approaches or exceeds the total number of possible combinations, about 80 million in this case. With about half a billion trials, the odds are definitely in our favor, and with one billion trials there is almost mathematical certainty that somewhere along the way we will have obtained the word “HOUSE”. The power of large numbers seems almost limitless. However, there are some practical limitations when resorting to this gimmick, and its shortcomings will now be exposed.
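The figures for the word “HOUSE” can be reproduced with a short Python sketch. It keeps p exact as $1/38^5$ instead of a rounded decimal, since with probabilities this small, rounding q to a handful of decimal places noticeably shifts the result; `math.log1p` and `math.expm1` keep $1 - (1-p)^N$ accurate. The trial counts are illustrative.

```python
import math

M = 38 ** 5   # 79,235,168 equally likely five-character strings
p = 1 / M     # chance that a single random string is "HOUSE"

def p_at_least_once(p, n):
    """1 - (1 - p)**n, computed stably for very small p."""
    return -math.expm1(n * math.log1p(-p))

for n in (10_000, 1_000_000, 10_000_000, 80_000_000, 500_000_000, 1_000_000_000):
    print(f"{n:>13,} trials: P = {p_at_least_once(p, n):.6f}")
```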

Instead of just forming a simple five-letter word, let us go for something a little bit more ambitious. Let us try to obtain from the computer, by drawing out characters entirely at random, the following phrase:

THE HOUSE ON THE PRAIRIE

This phrase consists of 24 characters (including the spaces between the words). Let us now evaluate the total number of different possible character strings of 24 characters drawn at random from an alphanumeric “random soup” of 38 characters. We can obtain this number by multiplying 38 by itself a total of 24 times. This yields the following number:

$$(38)^{24} = 82{,}187{,}603{,}825{,}523{,}214{,}603{,}738{,}912{,}597{,}460{,}647{,}936$$

$$\approx 8.21876 \cdot 10^{37}$$

where we have shortened the representation of such a huge number by means of scientific notation. [The number $10^{37}$ means a one followed by 37 zeros, whereas a number such as $10^{-25}$ means a decimal point followed by 24 zeros followed by a one.] Just by looking at this number, we may almost immediately begin to sense that something is not right, that things are no longer as simple as they appeared to be. Indeed, the task of using a computer to obtain on a purely random basis a phrase as simple as “THE HOUSE ON THE PRAIRIE” now appears to be way beyond reach. For if we are to use a digital computer, we must bear in mind that every computer that will ever be built in this Universe, no matter how fast, will always require a certain amount of time to carry out each one of its operations. Recall that in all of the cases we have studied so far, in order to turn the odds in our favor we needed to carry out a number of trials that either approached or exceeded the total number of ways in which the random event can come out. Assuming that the computer requires about one second to build each “random” phrase of 24 characters, and that within this time frame it must also compare the phrase against the “standard” phrase to determine if it has struck gold, we will have to wait about $8.21876 \cdot 10^{37}$ seconds before we have any reasonable hope of having obtained the desired phrase, generated completely at random, from the computer. The brutal fact is that if that computer had started doing this task at the very moment our Universe was born, it would still be hard at work and would not have covered in all this time even a minute fraction of the number of trials required to turn the odds in our favor. Indeed, if we put an upper estimate on the age of our Universe at about 15 billion years (which is in agreement with the data we have at hand at this point in time), then our Universe is no older than about

$$4.6656 \cdot 10^{17}\ \text{s} = 466{,}560{,}000{,}000{,}000{,}000\ \text{s}$$

Compare this number, the age of our Universe, with the time required for the trials carried out in the computer to approach or exceed the total number of possible combinations, at one second per trial:

82,187,603,825,523,214,603,738,912,597,460,647,936 seconds

The computer, working non-stop since the day our Universe was created until today, would not have covered even

0.000,000,000,000,000,000,568%

of the total number of trials required to turn the odds in our favor. For all of its hard work since the Universe was created, the computer would not have made even a tiny dent in turning the odds in our favor.
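Python's arbitrary-precision integers make it easy to confirm the size of this search space exactly and to redo the one-trial-per-second comparison. The sketch assumes, as the text does, a 38-character alphabet and an age of $4.6656 \cdot 10^{17}$ seconds for the Universe.

```python
PHRASE = "THE HOUSE ON THE PRAIRIE"
ALPHABET_SIZE = 38          # 26 letters + 10 digits + space + Enter, as in the text
AGE_S = 4.6656e17           # age of the Universe used in the text, in seconds

n_chars = len(PHRASE)                    # 24, counting the spaces
combos = ALPHABET_SIZE ** n_chars        # exact: Python integers have arbitrary precision
print(n_chars)
print(f"{combos:,}")
print(f"{combos:.5e}")

# One trial per second since the Big Bang: what fraction of the combinations is that?
fraction = AGE_S / combos
print(f"{100 * fraction:.3g}% of the combinations")
```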

Purists might argue that a computer carrying out each “build-phrase-compare-phrase” operation at one second per operation would be much too slow by today’s standards. But even if we had used a faster computer carrying out each operation at the rate of one microsecond per operation instead of a full second (the faster speed will allow one million different strings of 24 characters each to be generated and compared against the target string each second), the computer would not have covered even

0.000,000,000,000,568%

of the total number of trials required to turn the odds in our favor. And a computer this fast already has a decent speed by today’s standards for personal computers. Even if we summoned all of the information processing power locked up in IBM's powerful Blue Gene computer, the computer would not even make a small dent in beating the insurmountable odds.

As a last resort, we could try using many computers instead of a single computer in order to raise the odds, all of them working since the day the Universe was born. As soon as one of the computers had successfully generated (at random) the string “THE HOUSE ON THE PRAIRIE”, it would relay to the other computers a message telling them that the search was over, and then all the computers would come to a halt. This is a perfectly valid approach; we can either use a single computer to generate one thousand different random trials or one thousand different computers to generate a single random trial each. But even then, we would still come up short, for if we use one billion computers, every one of them carrying out each operation at the rate of one microsecond per operation, with all the computers hard at work since the very moment the Universe was created, this vast array of computational power would not have covered even

0.000,568%

of the total number of trials required to turn the odds in our favor. Clearly, we need more computers for the task. If we use one trillion computers, all working since the day our Universe was born, the probability would not rise beyond 0.568%, or roughly one-half of one percent. [Strictly speaking, for those with a penchant for mathematical rigor, using three, four or n times as many computers will not simply triple, quadruple, or multiply by n the probability of hitting upon the desired combination of characters, for there will be an unavoidable overlap of identical combinations generated at different times by the different machines. These repeated combinations represent missed opportunities that lower the efficiency of the search, so the probability will actually rise by somewhat less than a factor of n. However, we can cast aside these considerations, since a more precise (and much more complex) mathematical analysis will not alter the main conclusion we are trying to reach.] But this is easier said than done, for this much computational power may be unattainable for at least the next five hundred years, even assuming spectacular breakthroughs in technology such as the proposed quantum computer. [Even if we are someday able to use each individual atom to store and process bits of information, there is an upper physical limit to the amount of information that can be stored and processed, set by the finite number of distinguishable quantum states available to matter of a given energy confined to a bounded region of space. This upper limit is known today as the Bekenstein bound, an absolute upper limit on the information content of any bounded physical system.]
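The faster and larger scenarios can be tabulated the same way. The sketch below assumes the text's figures ($4.6656 \cdot 10^{17}$ seconds, one trial per microsecond per computer) and prints two numbers per scenario: the trials expressed as a fraction of the $38^{24}$ possible strings, which reproduces the percentages quoted above, and the probability of at least one hit, $1 - (1-p)^T$, which allows for repeated strings as in the bracketed remark. The scenario labels are illustrative.

```python
import math

TOTAL = 38 ** 24     # possible 24-character strings
AGE_S = 4.6656e17    # age of the Universe used in the text, in seconds
p = 1 / TOTAL        # chance that one random string is the target phrase

# Trials per second for each scenario in the text (labels are illustrative).
scenarios = {
    "1 computer at 1 trial/microsecond": 1e6,
    "1e9 computers at 1 trial/microsecond": 1e9 * 1e6,
    "1e12 computers at 1 trial/microsecond": 1e12 * 1e6,
}

results = {}
for name, rate in scenarios.items():
    trials = AGE_S * rate
    covered = trials / TOTAL                       # trials as a fraction of all strings
    p_hit = -math.expm1(trials * math.log1p(-p))   # 1 - (1 - p)**trials, repeats allowed
    results[name] = (covered, p_hit)
    print(f"{name:<40} covered {100 * covered:.3g}%  P(hit) {100 * p_hit:.3g}%")
```

For the trillion-computer case the two columns separate slightly (about 0.568% against 0.566%), which is the overlap effect described in the bracketed remark; for the smaller scenarios they agree to the digits shown.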

So far, we have only covered the problems involved in trying to get the simple phrase “THE HOUSE ON THE PRAIRIE” from a computer churning out hundreds of thousands of characters per second on an entirely random basis. What if we now try to get from the same computer, still working on an entirely random basis, a full printout of a work like Hamlet, consisting perhaps of something like 500,000 characters? As you might have guessed, the total number of combinations, given by the number $(38)^{500{,}000}$, is so huge (merely writing it out takes nearly 790,000 digits) that we might as well take it to be infinity. Trying that many combinations is far beyond the reach of any digital computer, however fast and however gigantic, even if a computer as big as the observable Universe had been built solely for that purpose. A task such as this one, of incredible exponential difficulty, marshalling numbers this large, destroys whatever magic large numbers could have worked for us in trying to beat the odds and turn them in our favor. Indeed, this is the straw that breaks the camel's back for the argument from large numbers. We are led to conclude, forced by the sheer weight of the overwhelming evidence, that if we come across a computer printout containing the full text of a work like Hamlet, then that work could not possibly have been produced on a random basis by any computer here on Earth, however big, however fast. This conclusion is so important that it will be emphasized again for the reader:

There is no natural random process in the entire Universe capable of producing a work like Hamlet.
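The size of $38^{500{,}000}$ can be gauged without building the number, by counting its decimal digits with a logarithm: a positive integer x has $\lfloor \log_{10} x \rfloor + 1$ digits. The Python sketch assumes, as the text does, about 500,000 characters and a 38-key alphabet.

```python
import math

N_CHARS = 500_000     # rough length of Hamlet assumed in the text
ALPHABET_SIZE = 38    # the same 38-key keyboard

# A positive integer x has floor(log10(x)) + 1 decimal digits; log10(38**N) = N * log10(38).
digits = math.floor(N_CHARS * math.log10(ALPHABET_SIZE)) + 1
print(f"38**{N_CHARS:,} has {digits:,} decimal digits")
```

The count comes out at 789,892 digits, so the number of possible printouts is itself a number nearly 790,000 digits long.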

In order for a work such as Hamlet to be produced, a prerequisite or initial condition is the existence of a powerful mind such as the mind of William Shakespeare. But this is not enough. Another prerequisite is the prior existence of an alphabet that can be craftily manipulated by a writer to produce a good piece of work. The alphabet is the other initial condition that will be manipulated by the creator to accomplish his/her purpose, and in this case it is the species Homo sapiens that has created the initial condition known as the Roman alphabet as a means of communication to convey the story and the ideas contained in Hamlet. Without the coming together of these initial conditions, there will be no Hamlet. And these are not the only initial conditions that must be fulfilled in order for Hamlet to come into being. Initial conditions that would allow a life form by the name of William Shakespeare to be produced and sustained on a planet inside our vast universe are also a necessary prerequisite.

From this chapter we can draw two important conclusions: first, it may be possible for us to tell from the characteristics of an object whether the object was created by Nature as a result of completely random phenomena or whether it was the result of careful planning by an intelligent being; and second, the initial conditions needed to create something may themselves be considered part of the original act of creation by the planner who has decided to produce the target object, regardless of how long ago the object was produced. For our purposes, as we study the evidence or the footprints left behind, the passage of time is irrelevant, unless time has made it impossible for us to trace back and figure out the initial conditions from whatever remnants those initial conditions would have left behind.